“Don’t call it a button, don’t call it a button, don’t call it a button,” I repeated in my head as I prepared to talk to three Apple executives deeply involved with the creation of Camera Control, the iPhone 16’s new photography and Apple Intelligence interface that may look and feel like a recessed button, but which is actually something far more interesting.

To their credit, Richard Dinh, Apple VP of iPhone Product Design; Apple human interface designer Johnnie Manzari; and Piyush Pratik from iPhone Product Marketing never directly called Camera Control a button. Instead, they made a persuasive argument about what it’s not.

This also happens to be a pivotal moment for Camera Control, which, while introducing iPhone 16 owners to an entirely new way of communicating with the cameras on their handset, had been lacking two core features: focus and exposure lock, and direct access to Apple Intelligence’s Visual Intelligence feature. Those two updates arrived this week as part of the iOS 18.2 update, and they both noticeably elevate the experience of using this non-button that iPhone owners are still getting to know.

Ever since Apple introduced Camera Control with its iPhone 16 line in September, I’ve suspected that the new ‘button’ contained multitudes. After all, it can recognize physical presses, pressure, and gestures. Each of those interactions accesses another part of the camera system (every lens, exposure, photo styles, and more). While I might joke that it’s a button, because it can be pressed, no other button on the iPhone offers anywhere near this level of functionality.

“Camera control is kind of unlike anything we’ve previously done, and behind that delightful easy-to-use experience is tons of interesting engineering details, including a bunch of Apple first innovations,” said Dinh, who, like other Apple execs I spoke to, took pains to describe the engineering feats necessary to squeeze so much technology into such a tiny space.

Central to Apple’s Camera Control efforts was something akin to the principle doctors strive to adhere to when treating a patient: first, do no harm. Apple wanted this new physical control on its iPhone 16 line, but it didn’t want to change the form or feel of the device in order to accommodate it.

“Because the technology behind the architecture is designed in harmony with the product, the design utilizes only the essential minimum space needed,” noted Dinh. Achieving this, however, wasn’t easy.

In one instance, Apple developed a new ultra-thin, flexible circuit and connector to carry signals through the iPhone 16’s water-resistant and dynamic seal.

“We had to solve some unique engineering challenges to pack everything we wanted in Camera Control into a compact space without impacting the rest of iPhone 16 and everything at the micron level mattered more than ever,” explained Dinh.

Apple executives told me that every iPhone 16 gets custom treatment. Sounding somewhat obsessive, Dinh described this individualized process.

“Every unit is tuned for tactility, force, and touch sensing. To balance even the smallest variants in physical dimensions of tolerances, we take topological scans of the key components for every device and individually optimize the assembly process using this data.” That sounds like a lot, but Dinh wasn’t done.

“Even the sapphire crystal depth of camera control relative to the band around the product is individually set in place, and then micro-welded to lock in that consistent flush design for every device.”

Hearing about Apple’s attention to detail isn’t surprising. The tolerances on an iPhone are undeniably exquisite. Still, I saw things differently once Apple revealed the level of technology sandwiched into Camera Control’s cramped space.

Inside Camera Control are four distinct technologies. There’s a high-precision tactile switch, the part that moves when you fully press Camera Control, which is used to commit and take a photo or shoot a video. “The beauty of a physical switch is that it lets you access and use your camera and visual intelligence even in situations when you might have wet fingers or you’re wearing gloves,” Dinh told me.

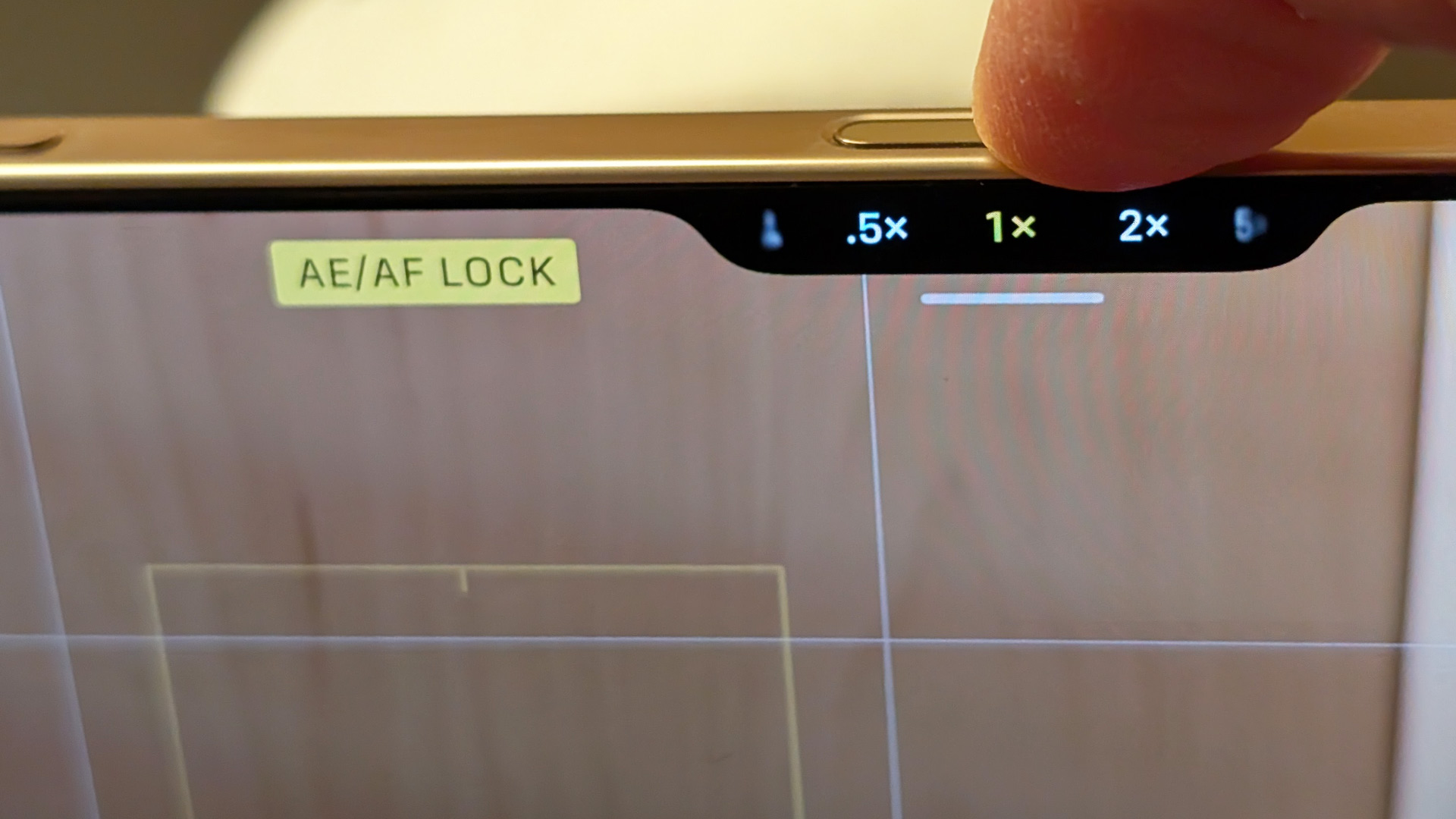

To detect light touches, like the light double press to access camera settings and the light long-press to lock in focus and exposure, there’s a high-precision force-touch sensor.

Right behind the sapphire crystal that you’ll touch and brush with your finger is a multi-pixel capacitive sensor that recognizes all your Camera Control gestures.

Finally, there’s the haptic interface, because you won’t know those light presses are working without a gentle vibration that feels like a button press. Dinh explained that the effect is partially achieved through a collaboration between Apple’s A18 and A18 Pro chips, the capacitive sensor, and Apple’s Taptic Engine.

Camera Control is slightly recessed on the iPhone 16 body, but that’s not necessarily how it rejects false clicks and touches. Apple programmed the touch sensor to read force signals and information from the tactile switch to reject false swipes. The phone also uses telemetry to tell it when it’s in your pocket or lying down – not necessarily optimal spots for photo taking – and ignores false touches in those situations, too.

Okay, okay, this is perhaps not just a button.

Despite all that technology, to the untrained eye, the iPhone 16 Camera Control is another physical accouterment on a handset slab that once seemed intent on discarding as many buttons and ports as possible (RIP Touch ID, 3.5mm headphone jack, and SIM card slot).

I tried to imagine the conversations inside Apple that sparked this notion of a new way to control photography and videography. Did it start with an Apple engineer shouting, “Eureka! Another button is all we need to perfect our near-perfect iPhone!”

“We didn’t necessarily begin by saying, ‘We should add a button to the iPhone.’ We started talking about experiences that we could enable,” said human interface designer Johnnie Manzari.

It’s hard to know exactly how a massive tech company like Apple operates without being on the inside, but I like to imagine that major decisions like this, where you’re considering adding a new hardware feature to an iconic product, are presented at the very top, to Apple CEO Tim Cook.

The team wouldn’t tell me if Cook had a say (though I bet he did), but they did their best to illustrate how Apple approaches the introduction of almost any new feature.

“I couldn’t share with you all the details of the debates that happened whenever we talk about something new like this,” said Dinh, “but I think that one thing you should know is, obviously, we all have a lot of great ideas, and it does take that level of debate and collaboration across all the teams to decide, ‘Yeah, Rich, let’s go add this piece of hardware in.’”

Inside Apple, decisions about adding new features start with a desire to fix or improve something.

Manzari offered this example: “We talked about how we wanted to make this really great, fast, and easy for capturing photos and videos. And I don’t think it’s enough to just walk into a meeting and tell people, ‘It’s going to be great for capturing photos and videos.’ They’re going to ask you, these leaders across the company, are going to ask you, ‘Tell me specifically, what does it mean to capture a photo or video in terms of capability, in terms of giving the customers what they want? Sometimes, speed and quality are at odds with each other. At times, they may be diametrically opposed and you can do something fast at low quality or you can do something slow, at high quality. Can you tell me very specifically with this product that you’re building, what those trade-offs would be, how you think about them?’ and so on.”

I swear, I could almost hear Tim Cook’s voice, but Manzari reminded me, “There are many other people in the company as well that are obviously involved in these processes and all of them are trying to understand how to solve customer problems.”

Camera Control is, in a way, a magnet for all camera-related features (and ones outside of photography and videography, like Visual Intelligence), and, as such, decisions about features and integration call on the expertise of multiple departments at Apple.

To be able to record a video with a long press of Camera Control required access to a number of low-level iPhone systems. “We had to go sit with the Silicon team, the ISP (Image Signal Processing) team, and camera architecture, to understand how quickly we could shift those systems over so that when the person held the button they would start to record video,” explained Manzari.

These distinct teams may typically focus on their proprietary zones, but in Manzari’s view, “As a human interface team, it’s impossible to design the experience in a silo.”

While I understood the level of innovation and the need for cross-department collaboration, I still wondered where in the iPhone 16 development cycle Apple committed to Camera Control.

I’d previously been led to believe that there’s a roughly 18-month development cycle for products like the iPhone, and physical features like Camera Control would be locked in fairly early.

Manzari, though, explained that when it comes to features, Apple takes the long view, and the company has been pulling on some “threads,” as he termed them, “for a very very long time.”

Before I could ask about other persistent tech themes in Apple’s long-term vision, Manzari told me, “We can’t talk about other threads that we’re still pulling on but you know, we’re in meetings every day…about all sorts of other things we’re interested in and trying to figure out how to solve those problems.”

Speaking of long threads, when I asked why Camera Control initially shipped without Exposure and Focus Lock, Manzari said, “We always say, we ship things when they’re ready.”

Piyush Pratik from the iPhone Product Marketing team insisted that with Camera Control’s layered approach “there’s something for everyone….For everyday users, just the ability to have access to quickly launch your camera using a tactile switch is huge….For camera enthusiasts and pro photographers, the ability to go deeper, have those more nuanced controls with the light press and the double light press and now things like, you know, AE and AF lock,” he said.

I haven’t used Camera Control much since the launch, and I often forget it’s on my iPhone 16 Pro Max, but the recent update has inspired a recalibration. As an amateur photographer, I’ve used countless DSLRs and point-and-shoot cameras, all with focus and exposure lock. It’s a powerful tool not only for well-exposed and clear imagery but also for composition. The same, it turns out, is true on the iPhone 16.

I can now use the long light press to lock the exposure, focus on my subject, and then shift the camera frame to recompose the photo into something, perhaps, more elegant. The benefit is that my subject remains clear and well-exposed, and the background is now softly out of focus. I’ve also noticed that in moments where I do not want to remove my gloves but still want to grab a photo or video, I’ll turn to the new Camera Control.

One thing I noticed is that Camera Control is, at least for me, much more comfortable to use in landscape mode, like a classic camera, than in portrait mode.

Apple studied this issue and, according to Pratik, “After doing a lot of deliberation, a lot of studies and so on, we do believe strongly that the current location and size of the camera control offers the best balance of use in both worlds.”

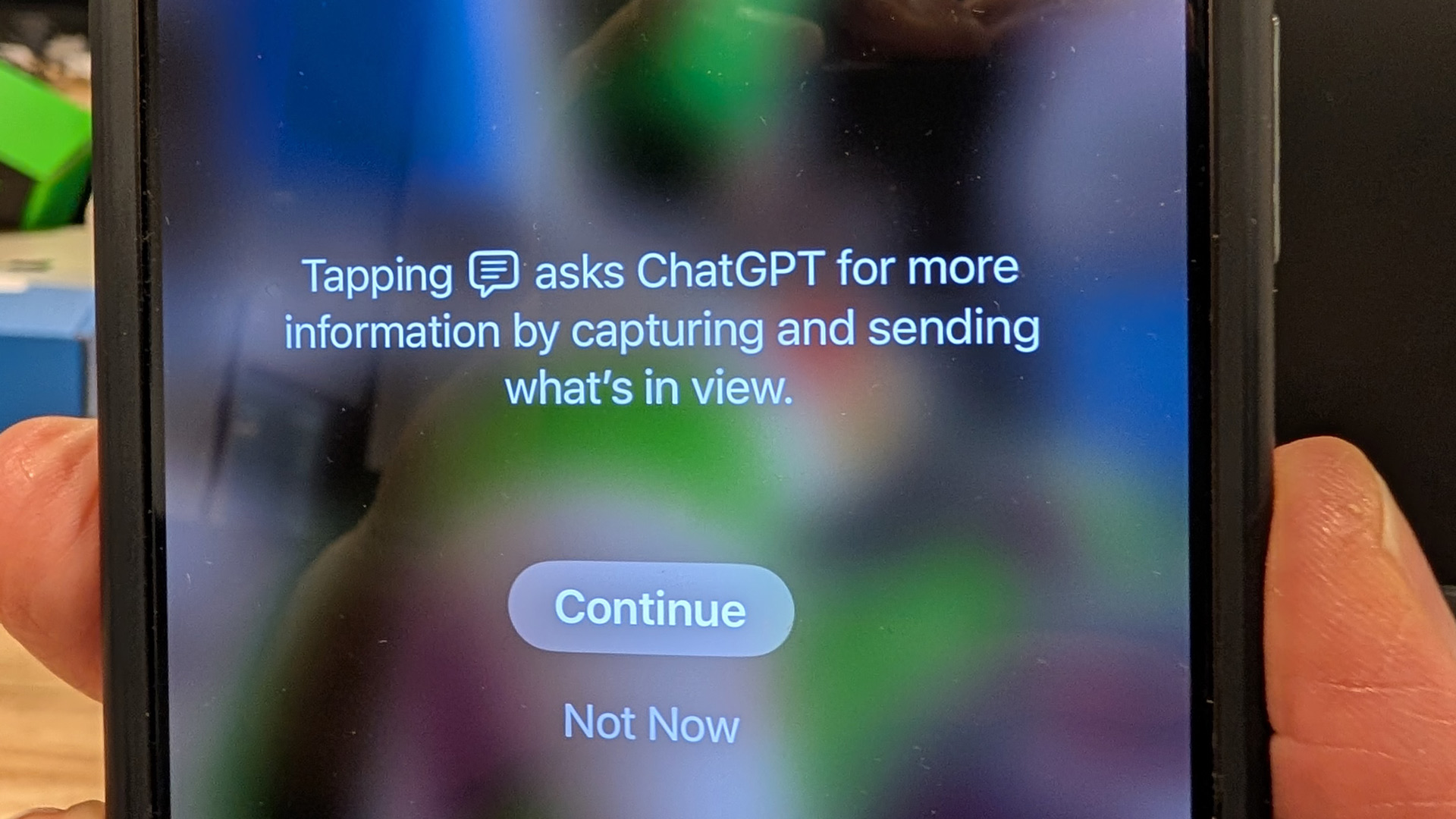

That may be more so now that Visual Intelligence is firmly ensconced in Camera Control. A long hard press of the non-button launches Visual Intelligence, which lets you snap a photo and then ask Google Search or ChatGPT to tell you more about it. The former, in particular, is quite effective at identifying any object I show it.

It’s also encouraging that Apple designed Camera Control with third-party apps in mind. Apps like Kino and Blackmagic are already taking advantage of the new iPhone 16 hardware features.

As for what comes next, Apple execs laughed when I suggested that future Camera Controls would feature a tiny OLED display, but there are already plans for more near-term updates like enhancing the Visual Intelligence features to identify different dogs and using it to recognize information from a flyer and add those details to your calendar.

By this point in the conversation, I’d started thinking of Camera Control almost as its own little mini-computer inside a larger computer. If not that, it’s certainly a physical doorway to so many Apple features.

If I was still not convinced that Camera Control isn’t a button, Pratik had some parting words: “We decided to call it the Camera Control and it’s not a button, right? So it wasn’t like, ‘Hey let’s add a button and see what the button does,’ because it is unlike any button that we have developed, and, as you heard Rich say, it is unlike anything we have ever developed. So the fact that it is touch-sensitive, pressure sensitive, and there’s so many experiences that this tiny real estate is unlocking for us, just makes it so much more than a button, which in summary, it’s a Camera Control.”